Ethical Considerations and Regulatory Frameworks for AI in the Pharmaceutical Industry

Opinion - (2024) Volume 13, Issue 2

Introduction

Regulatory bodies must ensure that AI systems undergo rigorous testing and validation before they are deployed in clinical settings. This includes evaluating the accuracy, reliability, and safety of AI algorithms, as well as their potential impact on patient care. Post-market surveillance is equally important, both to monitor the performance of AI systems in use and to address any issues that arise. Collaboration between regulatory agencies, pharmaceutical companies, and AI developers is essential to create comprehensive and effective regulatory frameworks.
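In practice, post-market surveillance of an AI model often begins with tracking how its predictions compare with confirmed outcomes once it is in use. The Python sketch below is a minimal, hypothetical illustration. It assumes that each prediction is eventually paired with a confirmed outcome. The class name `PerformanceMonitor`, the window size, and the accuracy threshold are illustrative choices, not values prescribed by any regulator.

```python
from collections import deque


class PerformanceMonitor:
    """Hypothetical post-market monitor: tracks accuracy over a sliding window
    of confirmed outcomes and flags the model for review when it drops too low."""

    def __init__(self, window_size: int = 100, min_accuracy: float = 0.90) -> None:
        self.window_size = window_size
        self.min_accuracy = min_accuracy
        # A deque with maxlen discards the oldest outcome once the window is full.
        self._hits = deque(maxlen=window_size)

    def record(self, prediction: int, observed: int) -> None:
        # Store whether the prediction matched the confirmed outcome.
        self._hits.append(prediction == observed)

    def accuracy(self) -> float:
        # NaN until at least one outcome has been recorded.
        if not self._hits:
            return float("nan")
        return sum(self._hits) / len(self._hits)

    def needs_review(self) -> bool:
        # Only flag once a full window is available, to avoid alarms on tiny samples.
        return len(self._hits) == self.window_size and self.accuracy() < self.min_accuracy


monitor = PerformanceMonitor(window_size=4, min_accuracy=0.75)
for prediction, observed in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(prediction, observed)
print(monitor.accuracy())      # 0.5
print(monitor.needs_review())  # True
```

A real surveillance plan would usually track metrics chosen for the specific clinical use, such as sensitivity and specificity rather than raw accuracy. It would also define in advance what happens when the review flag is raised.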

Description

One of the primary ethical concerns raised by AI in the pharmaceutical industry is the potential for bias in AI algorithms. AI systems are trained on large datasets, and if these datasets do not represent diverse populations, the resulting models can perpetuate and even exacerbate existing health disparities. For instance, if an AI system used for drug discovery or patient diagnosis is trained predominantly on data from one demographic group, it may perform less well for underrepresented groups. Such bias can lead to unequal access to new therapies and diagnostics, reinforcing existing inequalities in healthcare. To mitigate this risk, training datasets must be diverse and inclusive, representing a range of demographics, geographies, and disease states.

Another significant ethical consideration is the transparency and explainability of AI systems. The complex and often opaque nature of AI algorithms, particularly deep learning models, can make it difficult to understand how a given decision was reached. This lack of transparency can hinder the ability of healthcare professionals to trust and effectively use AI tools. In the pharmaceutical industry, where decisions can directly affect patient health and safety, AI systems must be not only accurate but also interpretable. Explainable AI techniques that reveal how these models arrive at their outputs can help healthcare professionals make informed decisions and foster trust among patients and stakeholders.

Data privacy and security are also paramount concerns. Developing and deploying AI systems often requires access to vast amounts of sensitive patient data. Ensuring the confidentiality and integrity of this data is critical both for maintaining patient trust and for complying with legal requirements.
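Pseudonymization is one widely used safeguard for the confidentiality of patient data. Direct identifiers are removed or replaced with tokens before the data reach model developers. The following Python sketch assumes a keyed hash (HMAC-SHA256 from the standard library) and a hypothetical record layout. The field names and the `pseudonymize` and `strip_direct_identifiers` helpers are illustrative only. This is pseudonymization rather than anonymization: anyone holding both the key and the original identifiers can recompute the tokens and re-link the records. Under the GDPR, pseudonymized data is still treated as personal data.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256) in hex form.

    The same ID and key always give the same token, so records can still be
    linked across tables, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical list of direct identifiers to drop; a real list depends on the dataset.
DIRECT_IDENTIFIERS = ("name", "address", "phone")


def strip_direct_identifiers(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with direct identifiers dropped and the ID tokenized."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


key = b"replace-with-a-key-from-a-secrets-store"  # hypothetical placeholder key
record = {"patient_id": "P-0001", "name": "Jane Doe", "age": 54, "hba1c": 7.2}
print(strip_direct_identifiers(record, key))
```

The tokens can only be recomputed with the key, so the key should be stored separately from the pseudonymized dataset. Quasi-identifiers such as age or postcode can still enable re-identification when combined, and they need separate treatment.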
The pharmaceutical industry must adhere to stringent data protection regulations, such as the General Data Protection Regulation (GDPR) in Europe and the Health Insurance Portability and Accountability Act (HIPAA) in the United States. These regulations require appropriate safeguards, such as encryption, anonymization, and secure protocols for storing and transferring data. Companies must also establish clear data governance frameworks that define how data is collected, processed, and shared, ensuring that patient consent and privacy are upheld at all times.

The use of AI in clinical trials presents additional ethical and regulatory challenges. AI has the potential to streamline the clinical trial process by identifying suitable candidates, predicting outcomes, and monitoring patient responses in real time. However, deploying AI in this context raises concerns about informed consent, because participants may not fully understand the role AI plays in the trial. Ensuring that participants are adequately informed about the use of AI and its implications is essential for maintaining ethical standards. Regulatory agencies must also establish clear guidelines for validating and approving AI-driven methodologies in clinical trials. These guidelines should include standards for algorithm performance, transparency, and accountability, so that AI technologies meet the rigorous requirements of clinical use.

The integration of AI into drug discovery and development also requires careful consideration of intellectual property (IP) and ownership. AI systems can generate novel insights and compounds that may lead to new therapies. Determining who owns these discoveries, particularly when they result from collaboration between humans and AI, can be complex.
Clear IP frameworks must be established to address these challenges, ensuring that inventors, developers, and other stakeholders are fairly recognized and compensated for their contributions.

The pharmaceutical industry must navigate a complex regulatory landscape as it integrates AI into its processes. Regulatory bodies such as the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) are actively developing guidelines and frameworks for the use of AI in drug development and healthcare. These guidelines aim to ensure the safety, efficacy, and ethical use of AI technologies, addressing issues such as algorithm validation, data integrity, and post-market surveillance. Companies must keep abreast of evolving regulations and engage with regulatory agencies, both to ensure compliance and to contribute to the development of robust regulatory frameworks.
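Algorithm validation is also one way to detect the training-data bias discussed earlier. Instead of reporting a single overall accuracy, a validation team can break performance down by subgroup and compare the results. The Python sketch below is a minimal, hypothetical example. The group labels, the helper names, and the 0.05 tolerance are assumptions chosen for illustration, and an accuracy gap is only one of many possible fairness measures.

```python
from collections import Counter, defaultdict


def representation(groups):
    """Share of records contributed by each subgroup."""
    counts = Counter(groups)
    return {g: c / len(groups) for g, c in counts.items()}


def subgroup_accuracy(groups, y_true, y_pred):
    """Accuracy computed separately for each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def flag_underperforming(acc_by_group, max_gap=0.05):
    """Groups whose accuracy trails the best-served group by more than max_gap."""
    best = max(acc_by_group.values())
    return sorted(g for g, acc in acc_by_group.items() if best - acc > max_gap)


# Hypothetical validation data: group "B" is under-represented and less accurately served.
groups = ["A", "A", "A", "A", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0]

acc = subgroup_accuracy(groups, y_true, y_pred)
print(representation(groups))
print(acc)                        # {'A': 1.0, 'B': 0.5}
print(flag_underperforming(acc))  # ['B']
```

Reporting representation alongside per-group accuracy helps separate two distinct problems. A group may be missing from the data, or it may be present but poorly served by the model.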

Conclusion

In conclusion, while AI holds immense potential to revolutionize the pharmaceutical industry, its deployment must be carefully managed to address ethical considerations and regulatory challenges. Ensuring diversity and inclusivity in training data, enhancing transparency and explainability, safeguarding data privacy and security, addressing informed consent in clinical trials, clarifying IP ownership, and navigating regulatory frameworks are all critical to the responsible and ethical use of AI in this field.

Author Info

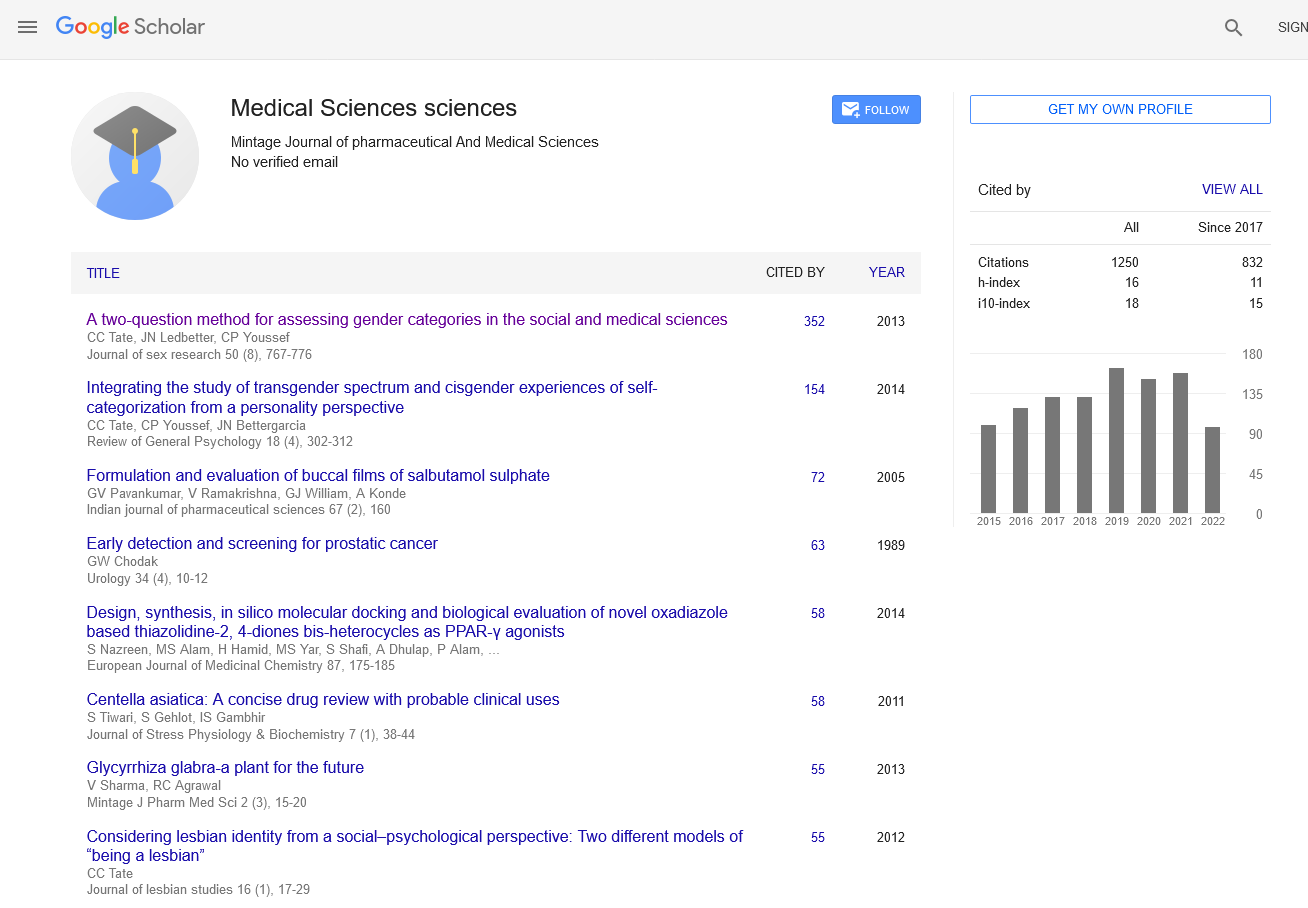

Sachiyo Nomura*

Received: 29-May-2024, Manuscript No. mjpms-24-141869; Editor assigned: 31-May-2024, Pre QC No. mjpms-24-141869 (PQ); Reviewed: 14-Jun-2024, QC No. mjpms-24-141869; Revised: 19-Jun-2024, Manuscript No. mjpms-24-141869 (R); Published: 26-Jun-2024, DOI: 10.4303/2320-3315/236017

Copyright: © 2024 This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

ISSN: 2320-3315

ICV: 81.58